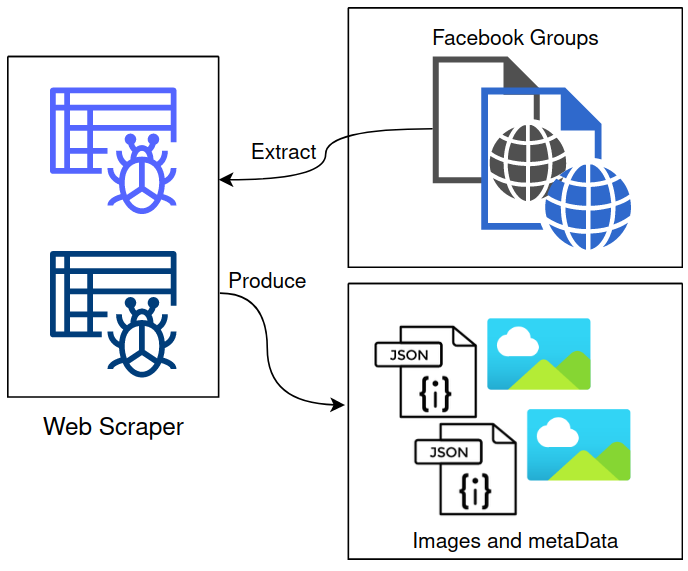

Facebook image Web Scrapper

Picture by julian Schultz

Building Facebook image Web Scrapper to get images for plant disease from facebook groups to us in Deep learning.

Introduction

Many data scientists and data-driven businesses struggle hard to gather data efficiently to enable their businesses and researches. No wonder social media are one of the major sources of data, while many people find a great value in gathering human-related data(like comments, reactions), Our case is a little different, we need images to enable Deep learning.

Many farmers and people use Facebook groups to share their images about crops disease, these groups are a great resource of data to find crop-related images and data.

Farmy.ai is a startup that seeks to develop AI and smart farming solutions to provide farmers with high-quality and rapid diagnoses. The goal is to provide farmers with the right information at the right time so they can make the right decisions. The business essentially relies on the integration of Data and Deep Learning to run its business.

What is Web Scraping?

Web scraping is a process by which we can collect and store data from websites in order to process it and extract valuable information from it. Typically, we scrap data that is not provided through an API, is only publicly available, and is for which we have access permission, otherwise illegal and ethical issues may arise.

Data Scraping can improve data collection. It is a systematic approach to collect large amounts of data. A good investment in data scraping can save a lot of money and resources.

How does it work?

Facebook-Web-scraper That We have built allows for automating of user login, collecting groups posts, processing each post data, and gathering all images(High-resolution images) which is by far the hardest task that took much of the time.

Facebook-Web-scraper uses Puppeteer, An open-source Chrome automation tool by google. The process of scraping posts and getting images consists of many steps, picture blow shows the major step to extract posts data and their images.

We are going to explain those steps briefly

- The first step is to launch the Chrome instance using a command line, this step can work in an authentication mode or without authentication.

- Start the web scraper, needs to prepare some config, and start navigating posts.

- Getting posts includes many steps, like loading posts, checking criteria when to stop the scraper(mainly by date).

- Extract posts data: at this step, we extract dates, links, descriptions, check for images, etc.

- Resolving images: this is the most complicated step since it involves navigating each post and resolving all images in a high-resolution format.

- After resolving all images we shutdown the Chrome instance

- Downloading all images to the web, each image will be saved either to AWS S3 or to a local disk.

- We also save the extracted data either in disks or AWS S3.

Unfortunately, the Facebook Web scraper is private, We love open source but it is something out of my control. Here is a real demo of the web scraper.

Video by Samy OuaretChallenges

Apparently, Web Scraping is a challenging Task especially from A Big company like Facebook that make a tremendous effort to block and track automated scrapers and bots (See link), in most cases the process is the same, but tools may be different Since different Website deploy many defensive tools and mechanisms to discover and block web scrapers.

Some of the main challenges in web scraping is preventing getting blocked by websites, Getting the complete and asserting right data and not some garbage data, knowing when to stop the scraper and some other considerations like:

- Keeping the Memory usage as low as possible.

- byPassing rate limiting, and implementing IP rotating.

- Getting a high resolution(images have many versions).

- Implementing a Retry logic, fail the scraper, and start again.

Conclusion

Implementing web scrapers could be much complex since there are many cases to fail due to the extensive network calls, the website counter-measures, Getting the right data, All these factors are real considerations when implementing a web scraper.